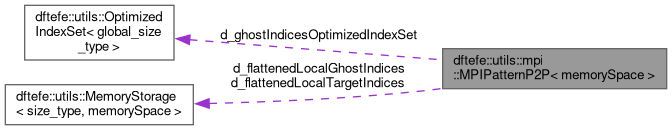

A class template to store the communication pattern (i.e., which entries/nodes to receive from which processor and which entries/nodes to send to which processor).


| Return type | Member | Description |
| --- | --- | --- |
| virtual | ~MPIPatternP2P ()=default | |
| | MPIPatternP2P (const std::vector< std::pair< global_size_type, global_size_type > > &locallyOwnedRanges, const std::vector< dftefe::global_size_type > &ghostIndices, const MPIComm &mpiComm) | Constructor. This constructor is the typical way of creating an MPI pattern for multiple global-ranges. |
| | MPIPatternP2P (const std::pair< global_size_type, global_size_type > &locallyOwnedRange, const std::vector< dftefe::global_size_type > &ghostIndices, const MPIComm &mpiComm) | Constructor. This constructor is the typical way of creating an MPI pattern for a single global-range. |
| | MPIPatternP2P (const std::vector< size_type > &sizes) | Constructor. This constructor creates an MPI pattern for a serial case with multiple global-ranges. It is provided so that one can seamlessly use this class even for a serial case. In this case, all the indices are owned by the current processor. |
| | MPIPatternP2P (const size_type &size) | Constructor. This constructor creates an MPI pattern for a serial case with a single global-range. It is provided so that one can seamlessly use this class even for a serial case. In this case, all the indices are owned by the current processor. |
| void | reinit (const std::vector< std::pair< global_size_type, global_size_type > > &locallyOwnedRanges, const std::vector< dftefe::global_size_type > &ghostIndices, const MPIComm &mpiComm) | |
| void | reinit (const std::vector< size_type > &sizes) | |
| size_type | nGlobalRanges () const | |
| std::vector< std::pair< global_size_type, global_size_type > > | getGlobalRanges () const | |
| std::vector< std::pair< global_size_type, global_size_type > > | getLocallyOwnedRanges () const | |
| std::pair< global_size_type, global_size_type > | getLocallyOwnedRange (size_type rangeId) const | |
| size_type | localOwnedSize (size_type rangeId) const | |
| size_type | localOwnedSize () const | |
| size_type | localGhostSize () const | |
| std::pair< bool, size_type > | inLocallyOwnedRanges (const global_size_type globalId) const | For a given globalId, returns whether it lies in any of the locally-owned-ranges and, if true, the index of the global-range it belongs to. |
| std::pair< bool, size_type > | isGhostEntry (const global_size_type globalId) const | For a given globalId, returns whether it belongs to the current processor's ghost-set and, if true, the index of the global-range it belongs to. |
| size_type | globalToLocal (const global_size_type globalId) const | |
| global_size_type | localToGlobal (const size_type localId) const | |
| std::pair< size_type, size_type > | globalToLocalAndRangeId (const global_size_type globalId) const | For a given global index, returns a pair containing the local index in the processor and the index of the global-range it belongs to. |
| std::pair< global_size_type, size_type > | localToGlobalAndRangeId (const size_type localId) const | For a given local index (localId), returns a pair containing its global index and the index of the global-range it belongs to. |
| const std::vector< global_size_type > & | getGhostIndices () const | |
| const std::vector< size_type > & | getGhostProcIds () const | |
| const std::vector< size_type > & | getNumGhostIndicesInGhostProcs () const | |
| size_type | getNumGhostIndicesInGhostProc (const size_type procId) const | |
| const SizeTypeVector & | getGhostLocalIndicesForGhostProcs () const | |
| SizeTypeVector | getGhostLocalIndicesForGhostProc (const size_type procId) const | |
| const std::vector< size_type > & | getGhostLocalIndicesRanges () const | |
| const std::vector< size_type > & | getTargetProcIds () const | |
| const std::vector< size_type > & | getNumOwnedIndicesForTargetProcs () const | |
| size_type | getNumOwnedIndicesForTargetProc (const size_type procId) const | |
| size_type | getTotalOwnedIndicesForTargetProcs () const | |
| const SizeTypeVector & | getOwnedLocalIndicesForTargetProcs () const | |
| SizeTypeVector | getOwnedLocalIndicesForTargetProc (const size_type procId) const | |
| size_type | nmpiProcesses () const | |
| size_type | thisProcessId () const | |
| global_size_type | nGlobalIndices () const | |
| const MPIComm & | mpiCommunicator () const | |
| bool | isCompatible (const MPIPatternP2P< memorySpace > &rhs) const | |


Problem Setup

Let there be \(K\) non-overlapping intervals of non-negative integers given as \([N_l^{start},N_l^{end})\), \(l=0,1,2,\ldots,K-1\). We term these intervals global-ranges and the index \(l\) the rangeId. Here, \([a,b)\) denotes a half-open interval where \(a\) is included, but \(b\) is not. Instead of partitioning each of the global-ranges separately, we are interested in partitioning all of them simultaneously across the same set of \(p\) processors. Had there been just one global-range, say \([N_0^{start},N_0^{end})\), the partitioning would result in each processor having a locally-owned-range defined as a contiguous sub-range \([a,b) \subseteq [N_0^{start},N_0^{end})\), such that it has no overlap with the locally-owned-range of any other processor. Additionally, each processor will have a set of indices (not necessarily contiguous), called the ghost-set, that are not part of its locally-owned-range (i.e., they are owned by some other processor).

If we extend the above logic of partitioning to the case where there are \(K\) different global-ranges, then each processor will have \(K\) different locally-owned-ranges. For the ghost-set, although \(K\) global-ranges will lead to \(K\) different ghost-sets, for simplicity we can concatenate them into one set of indices. We can do this concatenation because the individual ghost-sets are just sets of indices. Once again, for simplicity, we term the concatenated ghost-set as just the ghost-set. For the \(i^{th}\) processor, we denote the \(K\) locally-owned-ranges as \(R_l^i=[a_l^i, b_l^i)\), where \(l=0,1,\ldots,K-1\) and \(i=0,1,2,\ldots,p-1\). Further, for the \(i^{th}\) processor, the ghost-set is given by a strictly-ordered set of non-negative integers \(U^i=\{u_1^i,u_2^i,\ldots,u_{G_i}^i\}\). By strictly ordered we mean \(u_1^i < u_2^i < u_3^i < \ldots < u_{G_i}^i\). Thus, given the \(R_l^i\)'s and \(U^i\)'s, this class figures out which processor needs to communicate with which other processors.

We list the definitions that are handy for understanding the implementation and usage of this class. Some of them are already mentioned in the description above.

- global-ranges : \(K\) non-overlapping intervals of non-negative integers given as \([N_l^{start},N_l^{end})\), where \(l=0,1,2,\ldots,K-1\). Each of the intervals is partitioned across the same set of \(p\) processors.

- rangeId : An index \(l=0,1,\ldots,K-1\), which indexes the global-ranges.

- locally-owned-ranges : For a given processor (say with rank \(i\)), the locally-owned-ranges are the \(K\) intervals of non-negative integers given as \(R_l^i=[a_l^i,b_l^i)\), \(l=0,1,2,\ldots,K-1\), that are owned by the processor.

- ghost-set : For a given processor (say with rank \(i\)), the ghost-set is an ordered (strictly increasing) set of non-negative integers given as \(U^i=\{u_1^i,u_2^i,\ldots,u_{G_i}^i\}\).

- numLocallyOwnedIndices : For a given processor (say with rank \(i\)), numLocallyOwnedIndices is the number of indices that it owns. That is, numLocallyOwnedIndices = \(\sum_{l=0}^{K-1} |R_l^i| = \sum_{l=0}^{K-1} (b_l^i - a_l^i)\), where \(|.|\) denotes the number of elements in a set (cardinality of a set).

- numGhostIndices : For a given processor (say with rank \(i\)), it is the size of its ghost-set. That is, numGhostIndices = \(|U^i|\).

- numLocalIndices : For a given processor (say with rank \(i\)), it is the sum of numLocallyOwnedIndices and numGhostIndices.

- localId : In a processor (say with rank \(i\)), given an integer (say \(x\)) that belongs either to the locally-owned-ranges or the ghost-set, we assign it a unique index in \([0,numLocalIndices)\) called the localId. We follow the simple approach of using, as its localId, the position that \(x\) has when we concatenate the locally-owned-ranges and the ghost-set. That is, if \(V=R_0^i \oplus R_1^i \oplus \ldots \oplus R_{K-1}^i \oplus U^i\), where \(\oplus\) denotes concatenation of two sets, then the localId of \(x\) is its position (starting from 0 for the first entry) in \(V\).
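The localId definition above can be illustrated with a minimal, standalone sketch. It does not use the class itself: `numLocallyOwned` and `localIdByConcatenation` are hypothetical helper names, and the type aliases are local stand-ins assumed to mirror the library's `size_type` and `global_size_type`. The actual implementation of `globalToLocal` may well differ (for example, by using a binary search over the ghost-set).

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Local aliases for this sketch only (assumed to mirror the library's types).
using size_type        = unsigned int;
using global_size_type = unsigned long;
using Range            = std::pair<global_size_type, global_size_type>;

// numLocallyOwnedIndices = sum over l of (b_l - a_l) for half-open ranges.
size_type
numLocallyOwned(const std::vector<Range> &ownedRanges)
{
  size_type n = 0;
  for (const Range &r : ownedRanges)
    {
      assert(r.first <= r.second); // each range is [a, b)
      n += static_cast<size_type>(r.second - r.first);
    }
  return n;
}

// Hypothetical helper: position of globalId in
// V = R_0 (+) R_1 (+) ... (+) R_{K-1} (+) U.
// Returns {true, localId} if found and {false, 0} otherwise.
std::pair<bool, size_type>
localIdByConcatenation(const std::vector<Range> &           ownedRanges,
                       const std::vector<global_size_type> &ghostSet,
                       global_size_type                     globalId)
{
  size_type offset = 0;
  // Walk the locally-owned-ranges in rangeId order.
  for (const Range &r : ownedRanges)
    {
      if (globalId >= r.first && globalId < r.second)
        return {true, offset + static_cast<size_type>(globalId - r.first)};
      offset += static_cast<size_type>(r.second - r.first);
    }
  // Ghost entries follow all the owned entries.
  for (size_type j = 0; j < ghostSet.size(); ++j)
    if (ghostSet[j] == globalId)
      return {true, offset + j};
  return {false, 0u};
}
```

For instance, with locally-owned-ranges \([0,3)\) and \([10,12)\) and ghost-set \(\{5,20\}\), numLocallyOwnedIndices is 5, the global index 11 maps to localId 4, and the ghost index 20 maps to localId 6.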

Assumptions

- The class assumes a sparse communication pattern. That is, a given processor communicates with only a few other processors. This object should be avoided if the communication pattern is dense (e.g., all-to-all communication).

- The \(R_l^i\) and \(U^i\) must satisfy the following:

  - \(R_l^i = [a_l^i, b_l^i) \subseteq [N_l^{start},N_l^{end})\). That is, the \(l^{th}\) locally-owned-range in a processor must be a subset of the \(l^{th}\) global-range.

  - \(\bigcup_{i=0}^{p-1}R_l^i=[N_l^{start}, N_l^{end})\). That is, for a given rangeId \(l\), the union of the \(l^{th}\) locally-owned-range from each processor should equal the \(l^{th}\) global-range.

  - \(R_l^i \cap R_m^j = \emptyset\), if either \(l \neq m\) or \(i \neq j\). That is, no index can be owned by two or more processors. Further, within a processor, no index can belong to two or more locally-owned-ranges.

  - \(U^i \cap R_l^i=\emptyset\) for every \(l\). That is, no index in the ghost-set of a processor should belong to any of its locally-owned-ranges. In other words, an index in the ghost-set of a processor must be owned by some other processor and not by the same processor.
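The conditions above that involve only one processor's data can be checked without any communication. The following is a minimal sketch of such a check; `checkLocalAssumptions` and `contains` are hypothetical names, not part of the class, and the conditions that span processors (the union and cross-processor disjointness) are deliberately left out because they would require MPI communication.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Local aliases for this sketch only (assumed to mirror the library's types).
using global_size_type = unsigned long;
using Range            = std::pair<global_size_type, global_size_type>;

// True if g lies in the half-open interval [r.first, r.second).
static bool
contains(const Range &r, global_size_type g)
{
  assert(r.first <= r.second);
  return g >= r.first && g < r.second;
}

// Hypothetical check of the per-processor conditions only.
bool
checkLocalAssumptions(const std::vector<Range> &           ownedRanges,
                      const std::vector<global_size_type> &ghostSet)
{
  // Every range must be a valid half-open interval [a, b).
  std::vector<Range> nonEmpty;
  for (const Range &r : ownedRanges)
    {
      if (r.first > r.second)
        return false;
      if (r.first < r.second)
        nonEmpty.push_back(r);
    }

  // Within a processor, locally-owned-ranges must be pairwise disjoint.
  std::sort(nonEmpty.begin(), nonEmpty.end());
  for (std::size_t l = 1; l < nonEmpty.size(); ++l)
    if (nonEmpty[l].first < nonEmpty[l - 1].second)
      return false;

  // The ghost-set must be strictly increasing.
  for (std::size_t j = 1; j < ghostSet.size(); ++j)
    if (ghostSet[j] <= ghostSet[j - 1])
      return false;

  // No ghost index may lie in any locally-owned-range.
  for (global_size_type g : ghostSet)
    for (const Range &r : nonEmpty)
      if (contains(r, g))
        return false;

  return true;
}
```

With locally-owned-ranges \([0,3)\) and \([10,12)\), the ghost-set \(\{5,20\}\) passes, whereas \(\{2,20\}\) fails because 2 is locally owned, and \(\{20,5\}\) fails because it is not strictly increasing.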

A typical example which illustrates the use of \(K\) global-ranges is the following. Let there be two vectors \(\mathbf{v}_1\) and \(\mathbf{v}_2\) of sizes \(N_1\) and \(N_2\), respectively, that are partitioned across the same set of processors. Let \(r_1^i=[n_1^i, m_1^i)\) and \(r_2^i=[n_2^i, m_2^i)\) be the locally-owned-ranges for \(\mathbf{v}_1\) and \(\mathbf{v}_2\) in the \(i^{th}\) processor, respectively. Similarly, let \(X^i=\{x_1^i,x_2^i,\ldots,x_{nx_i}^i\}\) and \(Y^i=\{y_1^i,y_2^i,\ldots,y_{ny_i}^i\}\) be two strictly-ordered sets that define the ghost-sets in the \(i^{th}\) processor for \(\mathbf{v}_1\) and \(\mathbf{v}_2\), respectively. Then, we can construct a composite vector \(\mathbf{w}\) of size \(N=N_1+N_2\) by concatenating \(\mathbf{v}_1\) and \(\mathbf{v}_2\). We now want to partition \(\mathbf{w}\) across the same set of processors in a manner that preserves the partitioning of its \(\mathbf{v}_1\) and \(\mathbf{v}_2\) parts. To do so, we define two global-ranges \([A_1, A_1 + N_1)\) and \([A_1 + N_1 + A_2, A_1 + N_1 + A_2 + N_2)\), where \(A_1\) and \(A_2\) are any non-negative integers, to index the \(\mathbf{v}_1\) and \(\mathbf{v}_2\) parts of \(\mathbf{w}\). In usual cases, both \(A_1\) and \(A_2\) are zero. However, one can use non-zero values for \(A_1\) and \(A_2\), as that will not violate the non-overlapping condition on the global-ranges. Now, if we are to partition \(\mathbf{w}\) such that it preserves the individual partitioning of \(\mathbf{v}_1\) and \(\mathbf{v}_2\) across the same set of processors, then for a given processor id (say \(i\)) we need to provide two owned ranges: \(R_1^i=[A_1 + n_1^i, A_1 + m_1^i)\) and \(R_2^i=[A_1 + N_1 + A_2 + n_2^i, A_1 + N_1 + A_2 + m_2^i)\). Further, the ghost-set for \(\mathbf{w}\) in the \(i^{th}\) processor (say \(U^i\)) becomes the concatenation of the ghost-sets of \(\mathbf{v}_1\) and \(\mathbf{v}_2\). That is, \(U^i=\{A_1 + x_1^i, A_1 + x_2^i, \ldots, A_1 + x_{nx_i}^i\} \cup \{A_1 + N_1 + A_2 + y_1^i, A_1 + N_1 + A_2 + y_2^i, \ldots, A_1 + N_1 + A_2 + y_{ny_i}^i\}\). The above process can be extended to a composition of \(K\) vectors instead of two vectors.
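The index shifts in this example can be made concrete with a small sketch. The `Partition` and `CompositePartition` structs and the `compose` function are hypothetical and exist only for illustration; the resulting ranges and ghost-set are the kind of input one would then hand to the multi-range constructor.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Local aliases for this sketch only (assumed to mirror the library's types).
using global_size_type = unsigned long;
using Range            = std::pair<global_size_type, global_size_type>;

// Per-processor partition data of one vector (hypothetical struct).
struct Partition
{
  Range                         owned;  // [n, m) in the vector's own indexing
  std::vector<global_size_type> ghosts; // strictly increasing ghost indices
};

// Per-processor partition data of the composite vector w (hypothetical).
struct CompositePartition
{
  std::vector<Range>            ownedRanges; // {R_1, R_2}
  std::vector<global_size_type> ghostSet;    // U
};

// Shift v_1 by A1 and v_2 by A1 + N1 + A2, as in the text above.
CompositePartition
compose(const Partition &p1,
        global_size_type N1,
        const Partition &p2,
        global_size_type A1,
        global_size_type A2)
{
  const global_size_type shift1 = A1;
  const global_size_type shift2 = A1 + N1 + A2;

  CompositePartition w;
  w.ownedRanges = {{shift1 + p1.owned.first, shift1 + p1.owned.second},
                   {shift2 + p2.owned.first, shift2 + p2.owned.second}};
  for (global_size_type x : p1.ghosts)
    {
      assert(x < N1); // ghost indices of v_1 must lie in [0, N1)
      w.ghostSet.push_back(shift1 + x);
    }
  // Every shifted index of v_2 exceeds every shifted index of v_1,
  // so the concatenation stays strictly increasing.
  for (global_size_type y : p2.ghosts)
    w.ghostSet.push_back(shift2 + y);
  return w;
}
```

For example, with \(N_1=10\), \(r_1^i=[0,5)\), \(X^i=\{7,9\}\), \(r_2^i=[0,3)\), \(Y^i=\{4\}\), and \(A_1=A_2=0\), the composite owned ranges are \([0,5)\) and \([10,13)\), and the composite ghost-set is \(\{7,9,14\}\).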

A typical scenario where such a composite vector arises is while dealing with direct sums of two or more vector spaces. For instance, let there be a function expressed as a linear combination of two mutually orthogonal basis sets, where each basis set is partitioned across the same set of processors. Then, instead of partitioning two vectors, each containing the linear coefficients of one of the basis sets, it is logistically simpler to construct a composite vector that concatenates the two vectors and partition it in a way that preserves the original partitioning of the individual vectors.

- Template Parameters

-

| memorySpace | Defines the MemorySpace (i.e., HOST or DEVICE) in which the various data members of this object must reside. |

A 2D vector to store the locally owned ranges for each processor. The first index is the range id (i.e., it ranges from 0 to d_nGlobalRanges - 1). For range id \(l\), it stores pairs defining the \(l^{th}\) locally owned range in each processor. That is, d_allOwnedRanges[l] = \(\{\{a_l^0,b_l^0\}, \{a_l^1,b_l^1\}, \ldots, \{a_l^{p-1},b_l^{p-1}\}\}\), where \(p\) is the number of processors and the pair \((a_l^i,b_l^i)\) defines the \(l^{th}\) locally owned range for the \(i^{th}\) processor.

- Note

- Any pair \(a\) and \(b\) defines a half-open interval \([a,b)\), where \(a\) is included but \(b\) is not included.
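One use of such a 2D layout is to find which processor owns a given global index. The sketch below shows the idea with a plain linear scan over a structure shaped like d_allOwnedRanges; the `Owner` struct and the `findOwner` function are hypothetical, and the class may locate owners differently.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Local aliases for this sketch only (assumed to mirror the library's types).
using size_type        = unsigned int;
using global_size_type = unsigned long;
using Range            = std::pair<global_size_type, global_size_type>;

// Result of an ownership query (hypothetical struct).
struct Owner
{
  bool      found;
  size_type procId;
  size_type rangeId;
};

// allOwnedRanges[l][i] is the l-th locally owned range [a, b) of processor i.
Owner
findOwner(const std::vector<std::vector<Range>> &allOwnedRanges,
          global_size_type                       globalId)
{
  for (size_type l = 0; l < allOwnedRanges.size(); ++l)
    for (size_type i = 0; i < allOwnedRanges[l].size(); ++i)
      {
        const Range &r = allOwnedRanges[l][i];
        assert(r.first <= r.second);
        // Half-open test: a is included, b is not.
        if (globalId >= r.first && globalId < r.second)
          return {true, i, l};
      }
  return {false, 0u, 0u};
}
```

With two global-ranges \([0,10)\) and \([10,16)\) split over two processors as \(\{[0,4),[4,10)\}\) and \(\{[10,13),[13,16)\}\), the index 5 is owned by processor 1 in range 0, and the index 12 by processor 0 in range 1.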

A flattened vector of size number of ghosts containing the ghost indices ordered as per the list of ghost processor Ids in d_ghostProcIds. To elaborate, let \(M_i=\) d_numGhostIndicesInGhostProcs[i] be the number of ghost indices owned by the \(i^{th}\) ghost processor (i.e., d_ghostProcIds[i]). Let \(S_i = \{x_1^i,x_2^i,\ldots,x_{M_i}^i\}\) be an ordered set containing the ghost indices owned by the \(i^{th}\) ghost processor (i.e., d_ghostProcIds[i]). Then we can define \(s_i = \{z_1^i,z_2^i,\ldots,z_{M_i}^i\}\) to be the set defining the positions of the \(x_j^i\) in d_ghostIndices, i.e., \(x_j^i=\) d_ghostIndices[ \(z_j^i\)]. The indices \(z_j^i\) are called local ghost indices as they store the relative position of a ghost index in d_ghostIndices. Given that \(S_i\) and d_ghostIndices are both ordered sets, \(s_i\) will also be ordered. The vector d_flattenedLocalGhostIndices stores the concatenation of the \(s_i\)'s.

- Note

- We store only the ghost index local to this processor, i.e., the position of the ghost index in d_ghostIndices. This is done to use size_type, which is unsigned int, instead of global_size_type, which is long unsigned int. This helps in reducing the volume of data transferred during MPI calls.

A vector of size 2 times the number of ghost processors to store the start and end positions in the above d_flattenedLocalGhostIndices that define the local ghost indices owned by each of the ghost processors. To elaborate, for the \(i^{th}\) ghost processor (i.e., d_ghostProcIds[i]), the two integers \(n=\) d_localGhostIndicesRanges[2*i] and \(m=\) d_localGhostIndicesRanges[2*i+1] define the start and end positions in d_flattenedLocalGhostIndices that belong to the \(i^{th}\) ghost processor. In other words, the set \(s_i\) (defined in d_flattenedLocalGhostIndices above) containing the local ghost indices owned by the \(i^{th}\) ghost processor is given by: \(s_i=\) {d_flattenedLocalGhostIndices[ \(n\)], d_flattenedLocalGhostIndices[ \(n+1\)], ..., d_flattenedLocalGhostIndices[ \(m-1\)]}.
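The relationship between the flattened local ghost indices and their start and end positions can be sketched as follows. This is an illustration under assumptions, not the class's code: the `GhostLayout` struct, `buildGhostLayout`, and `ghostIndicesOwnedBy` are hypothetical, the owner of each ghost index is taken as a given input (`ownerOfGhost`), and ghost processors are assumed to be listed in increasing rank order.

```cpp
#include <cassert>
#include <map>
#include <vector>

// Local aliases for this sketch only (assumed to mirror the library's types).
using size_type        = unsigned int;
using global_size_type = unsigned long;

// Hypothetical container mirroring the data members described above.
struct GhostLayout
{
  std::vector<size_type> ghostProcIds;
  std::vector<size_type> numGhostIndicesInGhostProcs;
  std::vector<size_type> flattenedLocalGhostIndices;
  std::vector<size_type> localGhostIndicesRanges; // {n_0, m_0, n_1, m_1, ...}
};

// ownerOfGhost[z] is the rank that owns the ghost index at position z.
GhostLayout
buildGhostLayout(const std::vector<size_type> &ownerOfGhost)
{
  // Group positions z by owning rank; std::map keeps the ranks sorted and
  // each group stays ordered because z is visited in increasing order.
  std::map<size_type, std::vector<size_type>> positionsByProc;
  for (size_type z = 0; z < ownerOfGhost.size(); ++z)
    positionsByProc[ownerOfGhost[z]].push_back(z);

  GhostLayout layout;
  for (const auto &entry : positionsByProc)
    {
      const std::vector<size_type> &s = entry.second; // the set s_i
      const size_type               n =
        static_cast<size_type>(layout.flattenedLocalGhostIndices.size());
      const size_type m = n + static_cast<size_type>(s.size());
      layout.ghostProcIds.push_back(entry.first);
      layout.numGhostIndicesInGhostProcs.push_back(m - n);
      layout.flattenedLocalGhostIndices.insert(
        layout.flattenedLocalGhostIndices.end(), s.begin(), s.end());
      layout.localGhostIndicesRanges.push_back(n);
      layout.localGhostIndicesRanges.push_back(m);
    }
  return layout;
}

// Recover S_i, the ghost indices owned by the i-th ghost processor.
std::vector<global_size_type>
ghostIndicesOwnedBy(const GhostLayout &                  layout,
                    const std::vector<global_size_type> &ghostIndices,
                    size_type                            i)
{
  assert(2 * i + 1 < layout.localGhostIndicesRanges.size());
  const size_type n = layout.localGhostIndicesRanges[2 * i];
  const size_type m = layout.localGhostIndicesRanges[2 * i + 1];
  std::vector<global_size_type> S;
  for (size_type k = n; k < m; ++k)
    S.push_back(ghostIndices[layout.flattenedLocalGhostIndices[k]]);
  return S;
}
```

For ghost indices \(\{3,8,15,21\}\) owned by ranks \(\{2,0,2,0\}\), the ghost processors are \(\{0,2\}\), the flattened local ghost indices are \(\{1,3,0,2\}\), and the ranges vector is \(\{0,2,2,4\}\), so rank 2 (the second ghost processor) owns the ghost indices \(\{3,15\}\).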